Product

SESL (Simple Expert System Language) is a deterministic, rule-based expert system language that turns structured content into reliable, traceable decision logic. It is designed for tasks where explainability, consistency, and auditability are critical.

When used with Large Language Models (LLMs), SESL enables organisations to overcome the limitations of generative AI by embedding deterministic expert logic within flexible natural language workflows. This hybrid approach combines the strengths of both expert systems and generative AI, delivering reliable outcomes while retaining the adaptability of LLMs.

The sections below introduce SESL and outline the roadmap for its product capabilities.

Deterministic

SESL uses an advanced rules engine that produces the same result every time — no randomness, no hallucinations, no inconsistencies. SESL integrates with most AI platforms to convert documents or text into SESL rules.

Repeatable

No matter how many times you run the model, SESL always returns the same output for the same input. You can define many different scenarios to test against the rules in your model and be sure of consistent results.

Explainable

SESL uses a human-friendly language that is simple and easy to read. When you run a model, SESL provides detailed rule-level trace output showing exactly how a result was reached, allowing you to review and audit any decision precisely.
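To make the ideas of determinism and rule-level tracing concrete, here is a minimal Python sketch. It is not SESL syntax and not the SESL engine: the `Rule` class, the `run` function, and the example rules and facts are all hypothetical, chosen only to show how a fixed evaluation order yields the same result and the same trace on every run.

```python
from dataclasses import dataclass
from typing import Any, Callable

Facts = dict[str, Any]


@dataclass(frozen=True)
class Rule:
    """Hypothetical rule: a name, a condition, and the fact it sets when it fires."""
    name: str
    condition: Callable[[Facts], bool]
    outcome: tuple[str, Any]  # (fact name, value)


def run(rules: list[Rule], facts: Facts) -> tuple[Facts, list[str]]:
    """Evaluate rules in their listed order; return final facts and a trace."""
    result = dict(facts)  # copy so the input scenario is never mutated
    trace: list[str] = []
    for rule in rules:
        if rule.condition(result):
            key, value = rule.outcome
            result[key] = value
            trace.append(f"{rule.name}: fired, set {key}={value!r}")
        else:
            trace.append(f"{rule.name}: skipped")
    return result, trace


# Illustrative rules only; names and thresholds are assumptions.
RULES = [
    Rule("adult", lambda f: f["age"] >= 18, ("is_adult", True)),
    Rule(
        "eligible",
        lambda f: f.get("is_adult", False) and f["income"] < 30000,
        ("eligible", True),
    ),
]

scenario = {"age": 42, "income": 25000}
first = run(RULES, scenario)
second = run(RULES, scenario)
```

Because the rules are evaluated in a fixed order with no random or external input, `first` and `second` are identical, and each trace line records which rule fired and which fact it set.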

SESL Language & CLI

The SESL Language is the core of the SESL ecosystem — a deterministic, structured rule language that transforms written policies, regulations, or operational decision flows into fully executable logic.

The language is intentionally stable, readable, and extensible, ensuring that rules authored today remain compatible in the long term. This stability allows organisations to build industry solutions on top of SESL without worrying about breaking changes or unpredictable behaviour.

The SESL CLI provides a complete environment for developing and testing expert system models. Users can execute scenarios, validate rule behaviour, inspect fact changes, and view detailed trace explanations. While the language itself is largely complete, future enhancements will introduce improved tools for rule navigation, bulk scenario management, and richer visualisation of model execution.

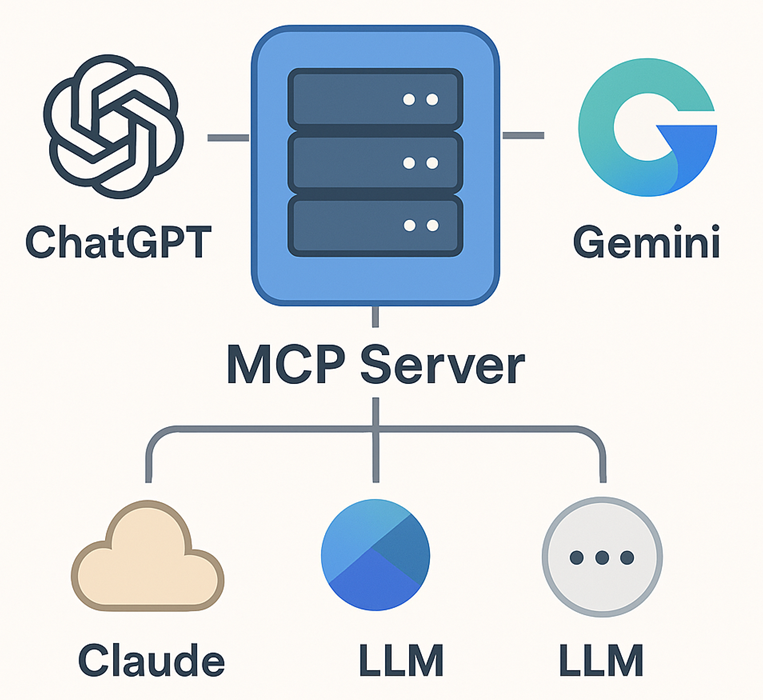

SESL connects to LLM AI (MCP)

Using AI tools like ChatGPT has become commonplace for many knowledge workers. While it's easy to generate SESL models from LLM tools, the SESL MCP (Model Context Protocol) interface goes further by providing a real-time connection between SESL and LLM tools such as ChatGPT, Claude, and Gemini.

Through MCP, users can generate SESL rules directly from documents or websites, ask questions of SESL models, run the models, and embed deterministic expert logic inside natural language workflows. This bridges the gap between explainable expert systems and flexible generative reasoning.

MCP is currently available as a demonstration service and will be rolled out to production in Q1 2026.

SESL Platform

The SESL Platform is being developed as a comprehensive SaaS management layer for creating, testing, deploying, and governing SESL models. It will provide a unified interface for configuring MCP servers, orchestrating models, managing version libraries, and monitoring execution activity at scale. Beyond core model operations, the platform will also support auditing, access control, team collaboration, and more.

The platform will additionally include an industry template marketplace where partners can publish SESL solutions. These templates can be reused and monetised—similar to an app store—enabling the growth of specialised SESL ecosystems designed for common business processes, proprietary knowledge (IP), government workflows, and highly regulated or expert-driven industries.

The platform will be released in early Q1 2026.

SESL API

The SESL API provides a production-ready interface for executing SESL models at high volume and low latency.

Designed for operational systems, the API allows applications to pre-store models that can then be called repeatedly, making it ideal for compliance checks, financial assessments, eligibility engines, workflow automation, and other transactional logic.
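The store-once, call-many pattern described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the SESL API: `ModelStore`, its `store` and `execute` methods, the `credit-check-v1` identifier, and the scoring threshold are all hypothetical, and the "model" is a plain Python function standing in for a SESL model.

```python
from typing import Callable

# A stand-in for a stored model: takes input facts, returns output facts.
Model = Callable[[dict], dict]


class ModelStore:
    """Hypothetical in-memory registry: store a model once, execute it many times."""

    def __init__(self) -> None:
        self._models: dict[str, Model] = {}

    def store(self, model_id: str, model: Model) -> None:
        self._models[model_id] = model

    def execute(self, model_id: str, facts: dict) -> dict:
        # Pass a copy so each call is independent of the caller's data.
        return self._models[model_id](dict(facts))


def credit_check(facts: dict) -> dict:
    """Illustrative decision logic; the 650 threshold is an assumption."""
    facts["approved"] = facts["score"] >= 650
    return facts


store = ModelStore()
store.store("credit-check-v1", credit_check)

# The stored model is reused for every incoming request.
results = [store.execute("credit-check-v1", {"score": s}) for s in (700, 600)]
```

Separating the one-time store step from the per-request execute step is what makes this pattern suited to high-volume transactional use: each call only supplies the input facts.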

With usage-based billing, the SESL API is optimised for scenarios where large throughput, fast responses, and consistent outputs are essential. Future API updates will introduce model lifecycle management endpoints, richer trace delivery options, and improved integration patterns for enterprise developers.

The SESL API will be released in Q2 2026.

Dedicated SESL Servers

Dedicated SESL servers provide isolated, high-performance environments for organisations that require strict security, predictable throughput, or regulatory-grade operational controls. These servers can support continuous or high-frequency model execution and can be configured to meet the needs of mission-critical systems where consistency is mandatory.

With full operational monitoring, auditing, and model allocation management, dedicated servers give enterprises the confidence to run SESL at scale. Whether supporting compliance decisions, operational scoring, or domain-specific regulatory evaluations, dedicated SESL infrastructure ensures reliability, traceability, and long-term governance.

Dedicated SESL servers will be released in Q2 2026.